Training a Chess Board Scanner with Vision Models and Active Learning

The Problem

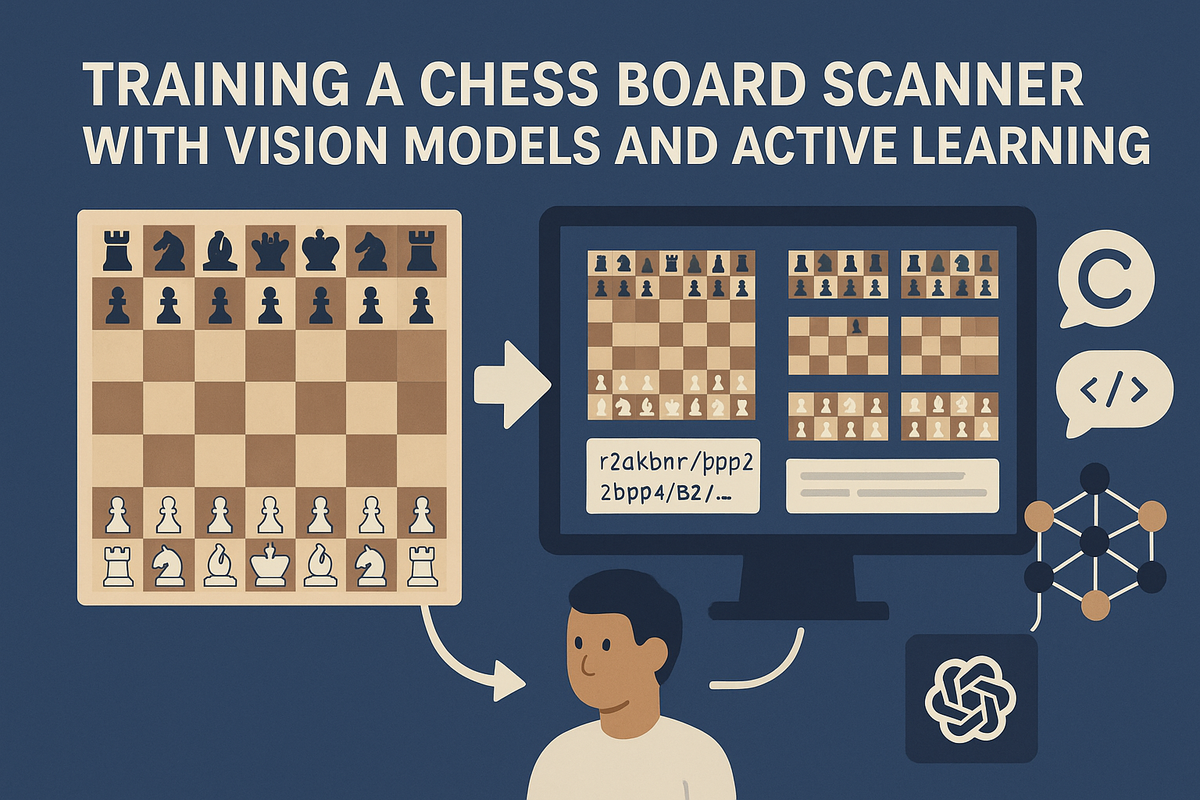

I knew there were a few relatively inexpensive or free chess board-to-virtual-board scanners on the market that could help me digitize positions from my chess books. But I wondered: how hard could it really be to leverage an LLM to help train a custom model? After all, vision-language models have become remarkably capable at understanding images.

The Solution: AI-Assisted Model Development with Active Learning

It took some trial and error, but using an LLM to assist with the development process dramatically reduced the complexity of building a machine learning model from scratch. This freed me up to focus on what I do best: human-in-the-loop refinement. The core insight was simple yet powerful: what if we trained a base vision model and then used Claude + Copilot to generate a web interface that could serve as a visual training ground for iteratively refining the model?

The Training Pipeline

The training process worked like this:

- Initial Model Training with Synthetic Data: We started with a base vision model, but instead of relying solely on standard datasets, we used Copilot and Claude to programmatically generate hundreds of synthetic chess board images. This synthetic data generation included:

  - Different board colors and visual styles to ensure the model wasn't overfitting to a single aesthetic

  - Multiple piece representations with varying appearances, cuts, and angles

  - Slight perspective skews and rotations to simulate real-world photo variations

  - A blend of synthetic images combined with real chess.com screenshots and photographs from chess books

This hybrid approach, mixing synthetic and real data, combines synthetic data generation with data augmentation (the color, skew, and rotation variations listed above). Rather than manually photographing and labeling thousands of board positions, we let AI-written scripts generate realistic variations. Because each synthetic image is rendered from a known position, its label comes for free, which dramatically reduces manual labeling effort while improving the model's robustness to different visual conditions. Vision models typically use convolutional neural networks (CNNs) or transformer-based architectures like Vision Transformers (ViT) to extract spatial features from images like these.
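To make the idea concrete, here is a minimal sketch of how labeled synthetic samples could be described before rendering. It is not the project's actual generator: the `SyntheticSample` class, the `PALETTES` colors, the fill rate, and the rotation and skew ranges are all assumptions chosen for illustration, and the rendering step itself (drawing the board image) is left out.

```python
import random
from dataclasses import dataclass

PIECES = "PNBRQKpnbrqk"  # FEN piece letters: uppercase = White, lowercase = Black

# Hypothetical (light, dark) RGB square colors; not the palettes used in the project.
PALETTES = [
    ((240, 217, 181), (181, 136, 99)),
    ((238, 238, 210), (118, 150, 86)),
    ((222, 227, 230), (140, 162, 173)),
]

@dataclass
class SyntheticSample:
    grid: list           # 8x8 labels: a piece letter, or "" for an empty square
    light_color: tuple   # RGB of the light squares
    dark_color: tuple    # RGB of the dark squares
    rotation_deg: float  # small in-plane rotation
    skew: float          # perspective skew strength (unitless, illustrative)

def random_grid(rng, fill=0.3):
    """Fill each square independently; positions are random, not necessarily legal."""
    return [[rng.choice(PIECES) if rng.random() < fill else "" for _ in range(8)]
            for _ in range(8)]

def random_sample(rng):
    """Pick a random position plus the visual style it should be rendered with."""
    light, dark = rng.choice(PALETTES)
    return SyntheticSample(
        grid=random_grid(rng),
        light_color=light,
        dark_color=dark,
        rotation_deg=rng.uniform(-5.0, 5.0),
        skew=rng.uniform(0.0, 0.1),
    )

# A seeded generator keeps the dataset reproducible between runs.
rng = random.Random(42)
samples = [random_sample(rng) for _ in range(200)]
```

Each sample carries its own ground-truth `grid`, so a renderer that draws the board from these parameters produces an image and its label at the same time.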

- Web Interface for Active Learning: I had an AI assistant create an interactive web application that generated 10+ random board positions on screen simultaneously. This approach leveraged active learning, a machine learning technique in which the model identifies the samples it is least confident about so that the human annotator can focus correction effort on those high-impact examples.

- Iterative Refinement Loop: For each board position displayed, the model would output its best prediction of the FEN (Forsyth-Edwards Notation, the standard notation for encoding a chess position). I could then click directly on pieces in the UI that the model got wrong, providing corrective feedback. This resembles reinforcement learning from human feedback (RLHF) in spirit, but it is more accurately described as supervised learning from human corrections: each click supplies a corrected label for a square rather than a reward signal.

- Generalization: Over multiple iterations, the model gradually improved its ability to recognize pieces, their positions, and board orientation from real photographs taken from chess books, eventually reaching a point where it generalized reliably to new images of chess positions rather than only those resembling its training data.
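The review loop described in the steps above can be sketched in a few functions. This is an illustration under stated assumptions, not the app's code: it assumes the model outputs a list of class probabilities for each of the 64 squares, it scores a board by its weakest square (one of several possible uncertainty measures), and the names `board_confidence`, `select_for_review`, `apply_correction`, and `grid_to_fen_placement` are hypothetical. Only the piece-placement field of FEN is produced; side to move, castling rights, and the other fields are omitted.

```python
EMPTY = ""  # label used in this sketch for an empty square

def board_confidence(square_probs):
    """Confidence of the weakest square: min over squares of the top class probability."""
    return min(max(probs) for probs in square_probs)

def select_for_review(predictions, k):
    """Return the ids of the k boards the model is least sure about."""
    return sorted(predictions, key=lambda bid: board_confidence(predictions[bid]))[:k]

def apply_correction(grid, row, col, piece):
    """Return a copy of the 8x8 grid with one square replaced by the human's label."""
    fixed = [rank[:] for rank in grid]
    fixed[row][col] = piece
    return fixed

def grid_to_fen_placement(grid):
    """Encode an 8x8 grid (rank 8 first, file a first) as the FEN piece-placement field."""
    ranks = []
    for rank in grid:
        out, empties = "", 0
        for square in rank:
            if square == EMPTY:
                empties += 1
                continue
            if empties:  # flush the run of empty squares before writing a piece
                out += str(empties)
                empties = 0
            out += square
        if empties:
            out += str(empties)
        ranks.append(out)
    return "/".join(ranks)

# Usage: the standard starting position, with a simulated misread on d8.
start = ([list("rnbqkbnr"), list("pppppppp")]
         + [[EMPTY] * 8 for _ in range(4)]
         + [list("PPPPPPPP"), list("RNBQKBNR")])
predicted = apply_correction(start, 0, 3, "k")      # pretend the model saw a king on d8
corrected = apply_correction(predicted, 0, 3, "q")  # the human clicks d8 and fixes it
```

Here `grid_to_fen_placement(corrected)` yields the familiar `rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR`, and each corrected square becomes a new labeled example for the next training round.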

Why This Approach Works: Key ML Concepts

This training strategy combines several powerful machine learning techniques:

- Transfer Learning: Rather than training from scratch, we built on a pre-trained model, leveraging features it already learned from large datasets

- Active Learning: Instead of randomly labeling more data, we focused on examples the model was uncertain about

- Human-in-the-Loop: This hybrid approach combines the pattern recognition strength of neural networks with human domain expertise and visual judgment

- Iterative Fine-Tuning: Each correction feedback loop slightly adjusts the model's weights, gradually reducing prediction errors

The beauty of this approach is that it needed far fewer hand-labeled examples than labeling a full dataset up front: synthetic images come with their labels for free, and human effort goes only to the positions the model gets wrong.
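As a rough illustration of how a single correction can nudge the weights, the sketch below applies one gradient step to a tiny softmax classifier. It is a stand-in, not the model described in this post: it assumes a frozen pre-trained backbone has already turned a square into a feature vector `x`, and it only updates a final linear layer `W`. The function names, learning rate, and toy numbers are all assumptions.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(W, x):
    """Class probabilities for feature vector x under per-class weight vectors W."""
    return softmax([sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in W])

def sgd_step(W, x, label, lr=0.1):
    """One cross-entropy gradient step toward the human-corrected label (updates W in place)."""
    probs = predict(W, x)
    for c, w in enumerate(W):
        grad = probs[c] - (1.0 if c == label else 0.0)  # d(loss)/d(score_c)
        for i in range(len(w)):
            w[i] -= lr * grad * x[i]
    return probs

# Toy usage: two classes, two features, and one corrected example labeled class 0.
W = [[0.0, 0.0], [0.0, 0.0]]
x = [1.0, 0.0]
before = sgd_step(W, x, label=0)
after = predict(W, x)
```

After the step, the probability assigned to the corrected class rises slightly (`after[0] > before[0]`), which is the small per-correction adjustment the list above refers to.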

Try the Result

This training methodology powers our Chess Scanner AI + GPT app, which combines computer vision board recognition with strategic AI analysis. The model we trained can now accurately convert photographs of real chess boards into FEN positions, whether from chess books or online screenshots.

Download Chess Scanner AI + GPT on Google Play to see the trained model in action. Photograph any chess position and get instant position analysis with both tactical precision and strategic insight.